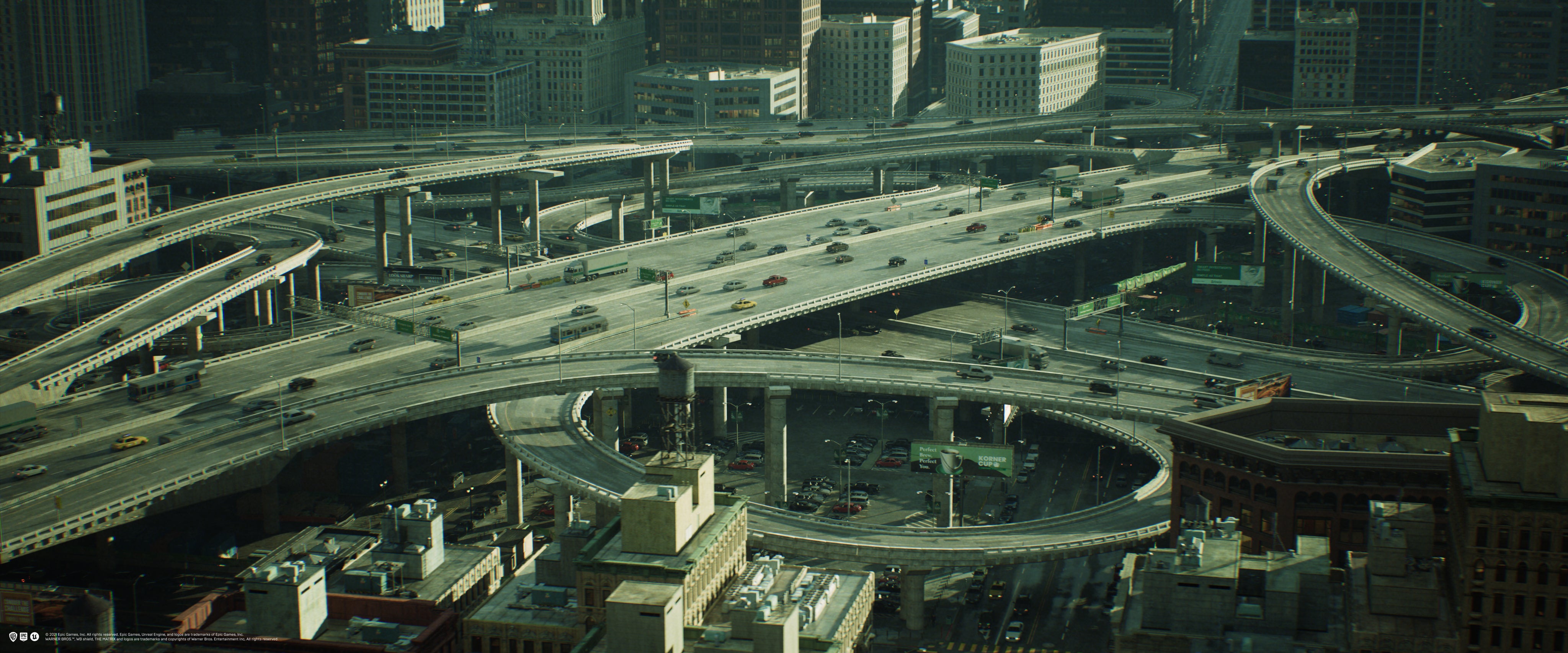

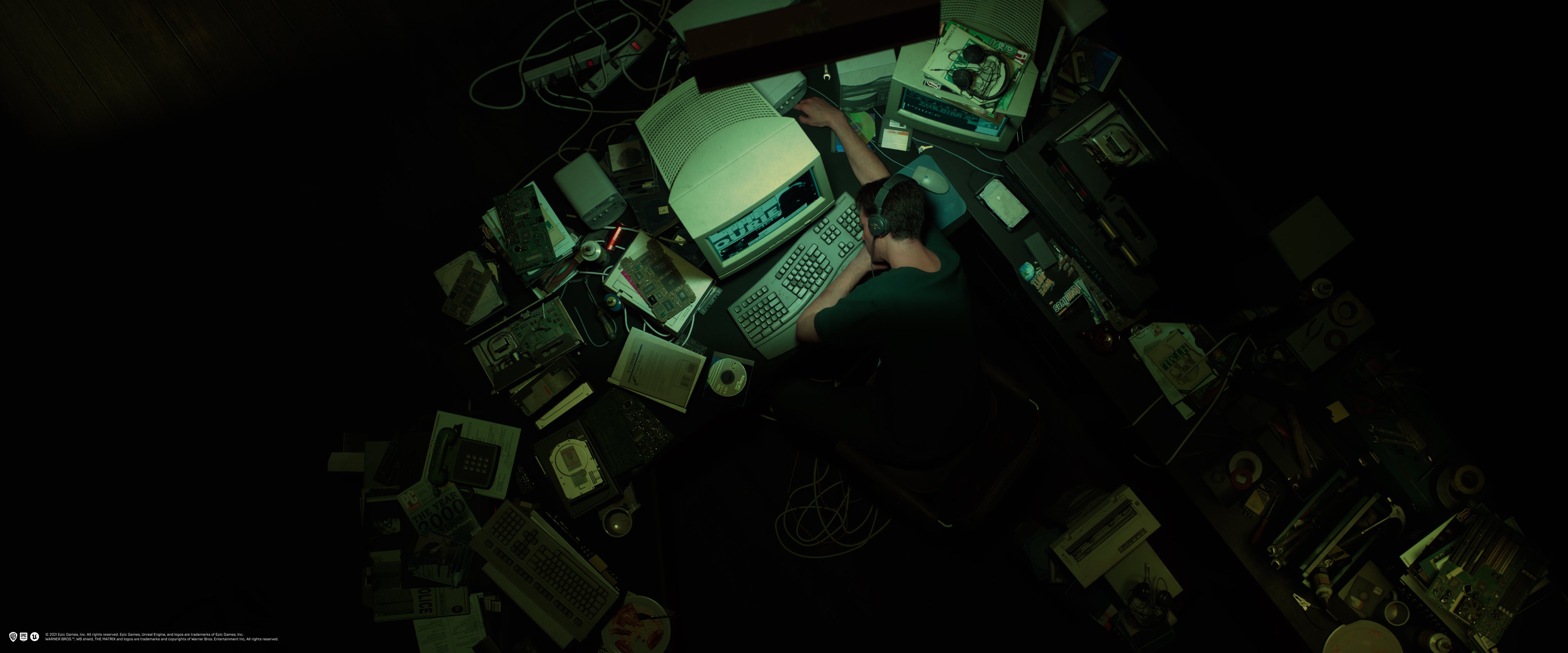

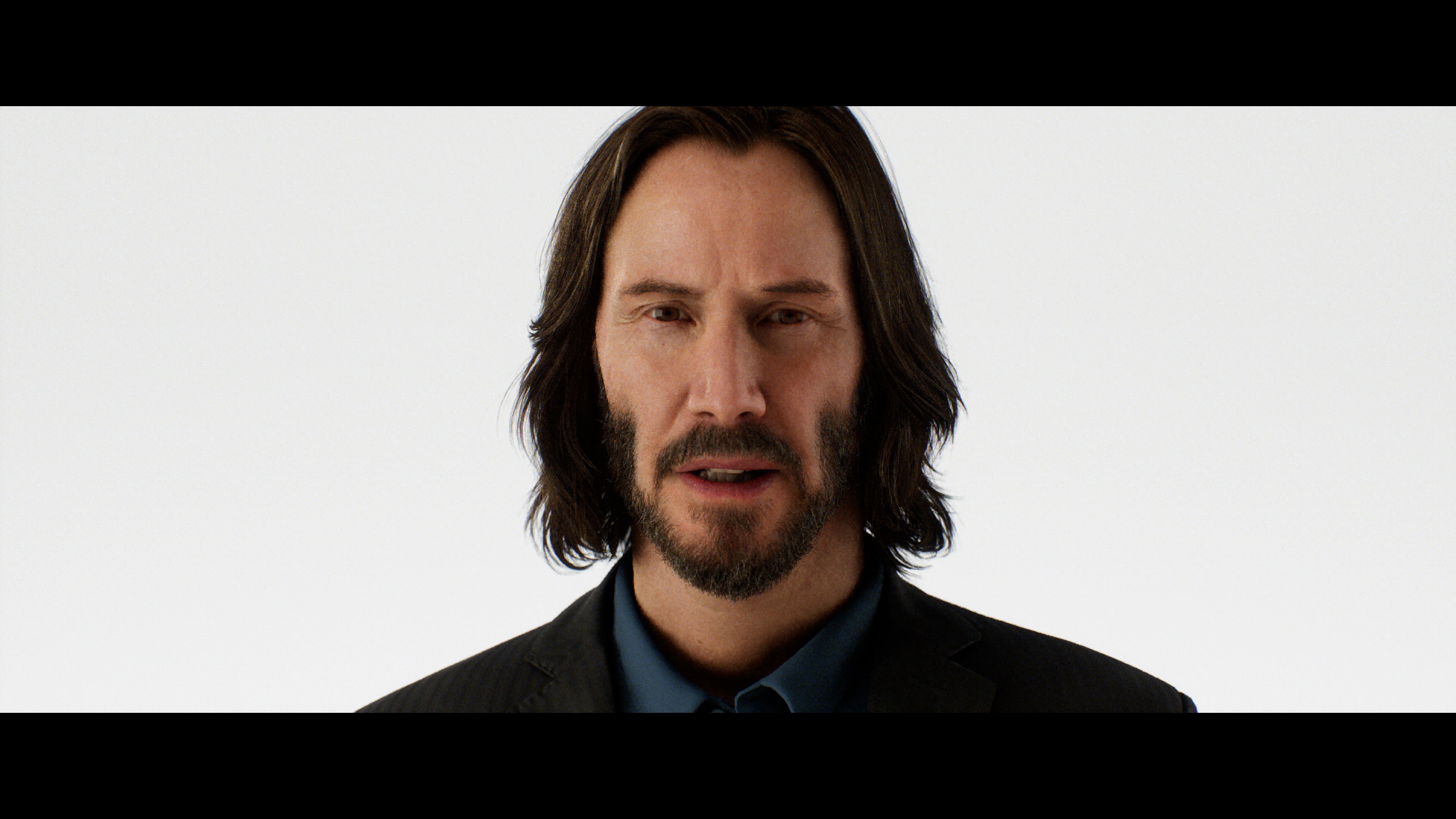

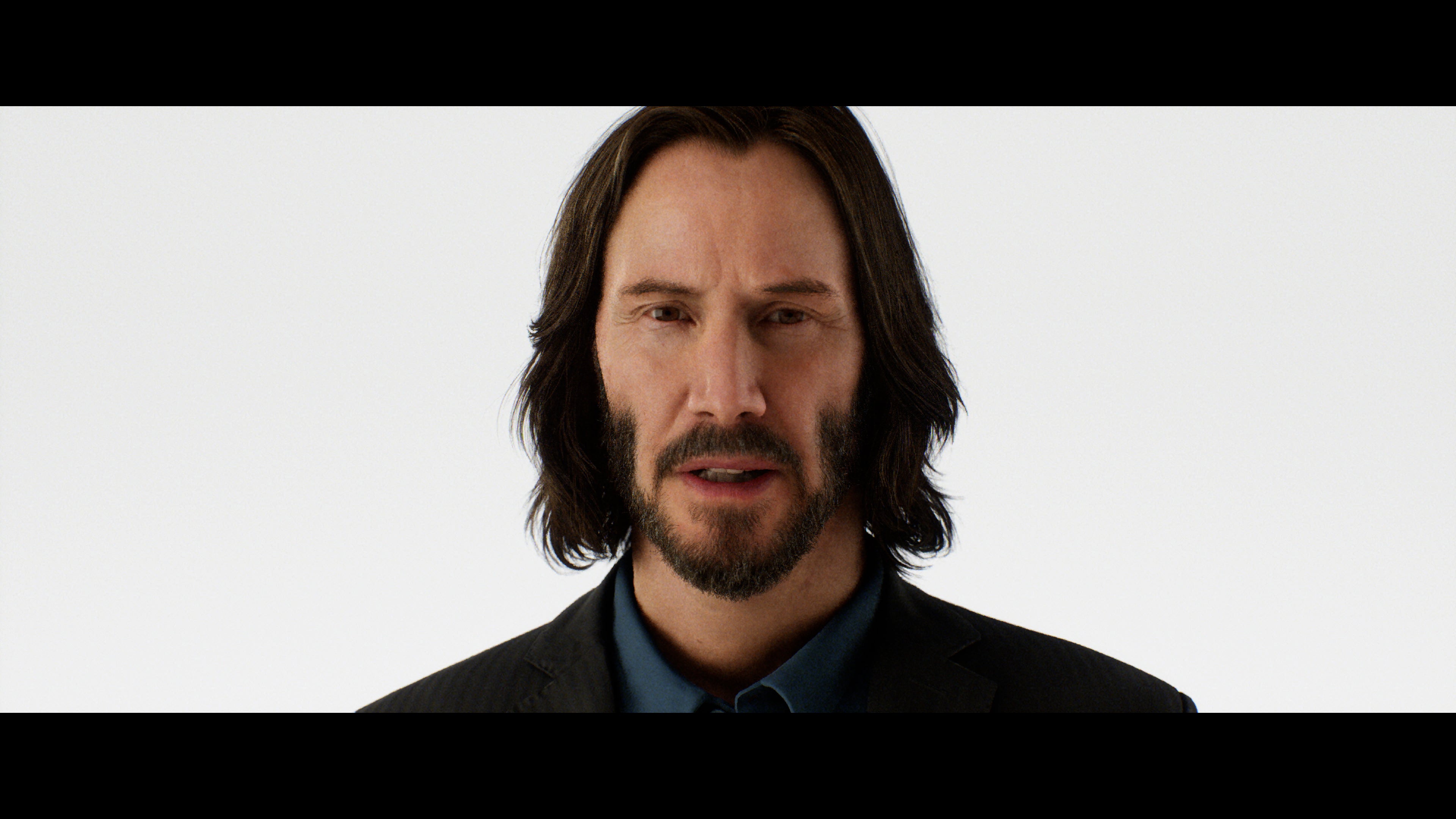

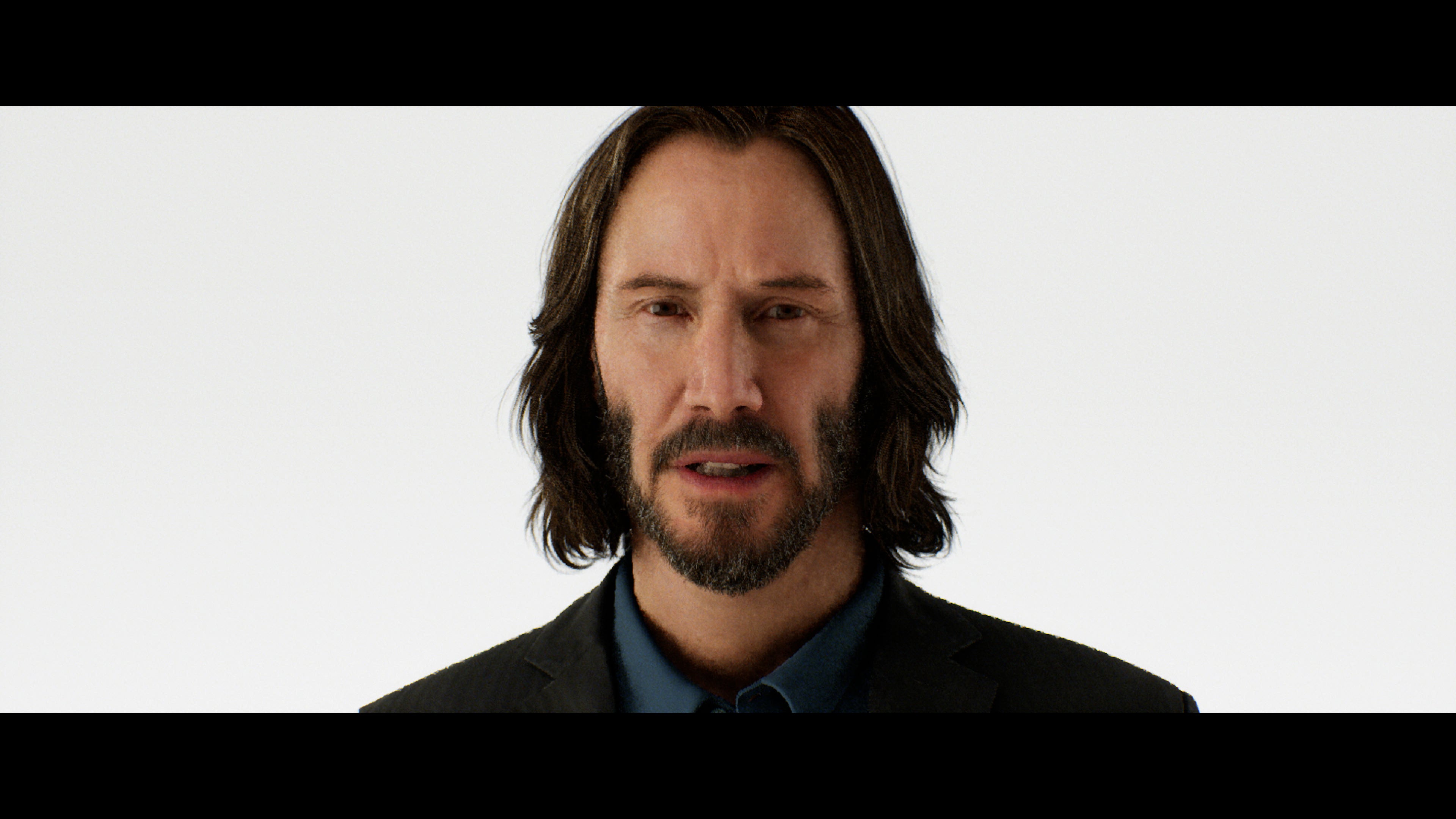

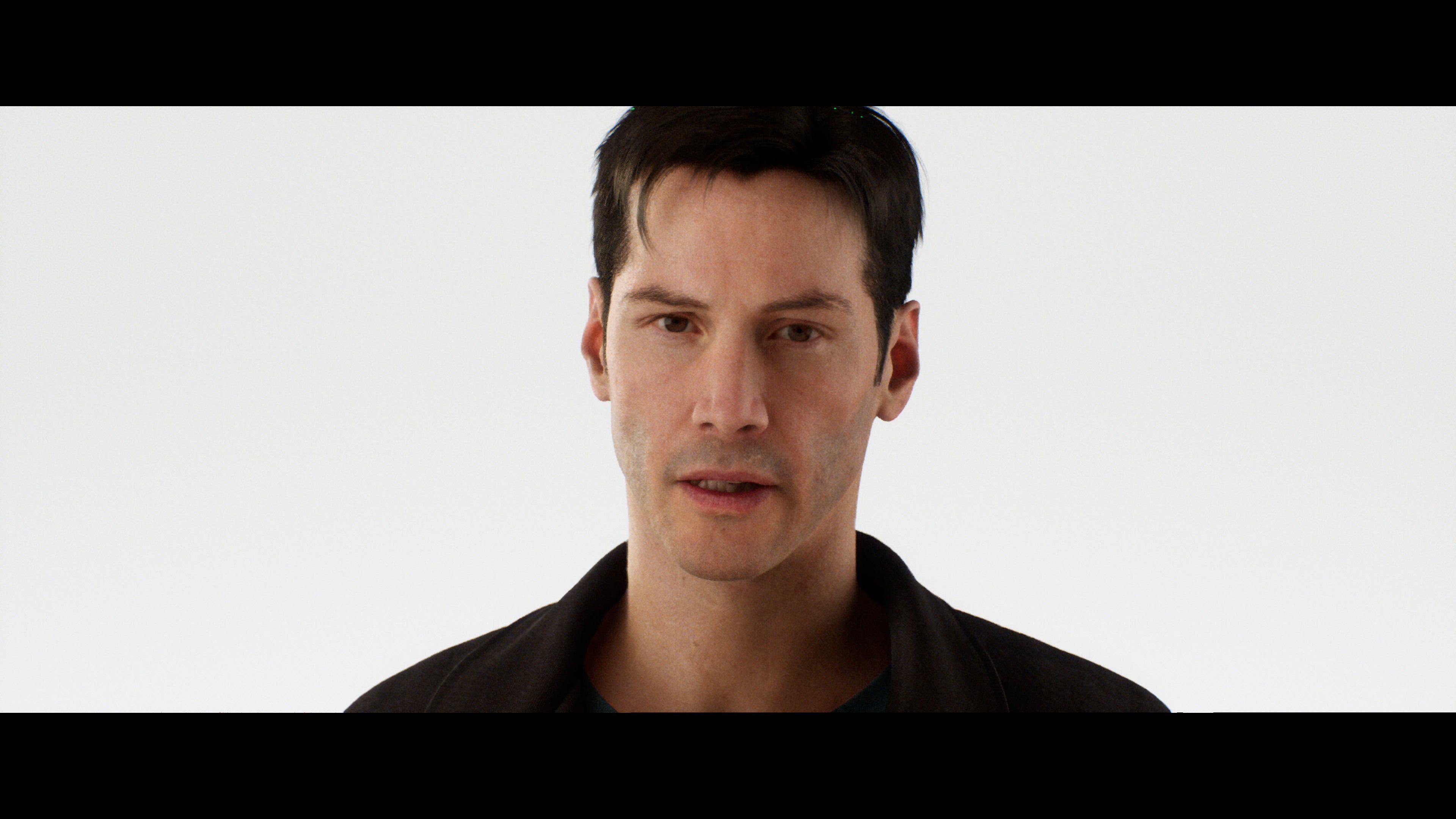

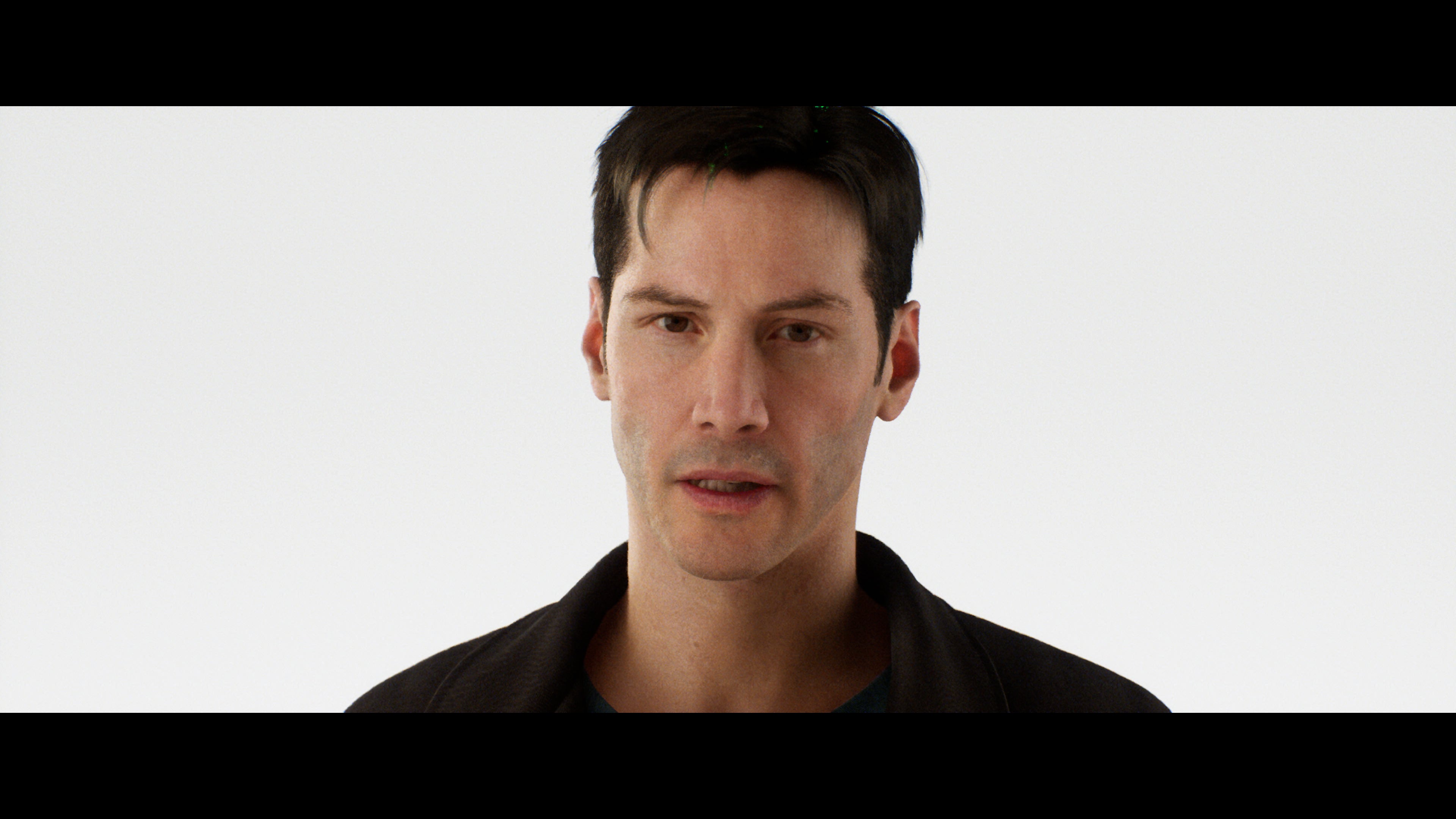

However, Epic’s objectives with this demo are many and varied, beyond the obvious visual spectacle. We spoke to key members of the firm’s special projects team to gain a better understanding of the full significance of this landmark release, discovering that the team has some serious pedigree, having made the original Reflections demo for the Nvidia Turing launch, using Star Wars: The Force Awakens assets to demonstrate hardware accelerated ray tracing. It’s the same group that worked on Lumen in the Land of Nanite - the stunning real-time demo that showed the world that the new consoles were capable of so much more. Valley of the Ancient followed, emphasising the quality delivered by the Nanite micro-polygon system and enhancements in Lumen-powered global illumination, and made available on PC. This team also worked with Lucasfilm, creating the remarkable LED wall used in the production of The Mandalorian’s virtual sets.

For this talented group, The Matrix Awakens is a chance to validate Epic’s technology on the kind of mass scale we’ve not seen so far. It’s about wrapping it up and delivering it to the new generation of mainstream gaming consoles.

“Let’s just release it, let’s release it to the public, right?” says Jeff Farris, the Technical Director of Special Projects. “[Let’s allow] developers and customers to put their hands on it and hold ourselves accountable for making sure that this tech is 100 percent ship-ready. And that’s what we pushed through to make sure that yes, you can put this on real world hardware - this level of graphics and this level of interactivity is achievable.”

“We didn’t want to see a YouTube comment that says, oh, it’s running on a massive PC, it’s not a real PS5,” adds Jerome Platteux, Art Director Supervisor. “Yes, it’s on every next-gen console, including Xbox Series S.”

The story of how this collaboration happened is fascinating, indicative of how rendering in both the gaming and motion picture spaces is converging.
Epic’s CTO, Kim Libreri, came to the company after a highly accomplished career working on major Hollywood pictures including the Matrix trilogy, and kept in touch with Lana Wachowski. This opened the door to Epic gaining access to the IP and assets. And maybe that’s how The Matrix Awakens manages to deliver a shot-for-shot real-time remake of the classic scene where Neo is awoken by Trinity reaching out to him via his computer. It’s certainly how the iconic bullet-time scene is recreated in real-time - Epic had access to the original assets and actually increased the resolution on Neo himself, with UE5’s Chaos physics engine used to accurately map the slow motion movement of his coat. It’s also how the team were able to recreate the original Neo and Trinity even though they custom-scanned today’s Keanu Reeves and Carrie-Anne Moss for integration into their MetaHuman character rendering system.

The whole concept of the Matrix megacity also fed into Epic’s objectives. With the prior UE5 demos, the idea of a massive open world with infinite view distances had been hinted at but never actually delivered. At the core of The Matrix Awakens is a procedurally generated, then customised, open world capable of being delivered by a very small development team. The city itself is based on real world assets (tailored and adjusted to avoid copyright concerns) and is effectively a mixture of New York, San Francisco and Chicago. This open world and indeed The Matrix Awakens’ main character - IO - aren’t really a part of Warner Bros IP, so this entire segment of the demo including the assets will be given away with the full release of Unreal Engine 5 in the spring of next year, along with tutorials for developers on how to build their own open worlds.

“I’m really excited to see what the community is going to do with this, right?” says Jeff Farris. “I mean one of the big goals with the engine itself is to just facilitate creators. What’s the friction and how do we make it easy?
You look at the tech like Lumen and Nanite, and that stuff really helps with that, but just releasing this to the community and letting people do what they’re going to do with it is amazing.”

A core philosophy of Unreal Engine has been the democratisation of core technologies, but also enabling much bigger projects from smaller studios - including Epic’s special projects team itself.

“It’s a small team, so we don’t have the army of artists that comes with Fortnite,” adds Jerome Platteux. “So we wanted to prove to the world that we can generate a large scale city with a small team and the best approach is a procedural system. So that means we use Houdini extensively. This is where we create all the recipes for the world.”

Houdini is a 3D software package with an emphasis on procedural generation. Platteux shows us the city in The Matrix Awakens, revealing that Houdini defines the environment in terms of, say, the size of the roads and the heights of the buildings. When complete, the actual city itself is not exported, but rather a point cloud of just a few megabytes of data is generated, which is exported into Unreal. The Epic engine ingests the data into UE5’s ‘world processor’ tool. Here, the city can be further defined - the contours of the city, the grids of its road structures, the main artery onto which a freeway can be added. As the city is sculpted, the grid is filled with volume, with detail extracted from 10 million different assets.

Streaming in everything may well sound nightmarish, but that’s where the world partition system comes into play, taking care of the background streaming. In the final portion of the demo, you’re free to explore the world and it does seem like fast traversal causes some issues, but this is part and parcel of ongoing development work, as Michal Valient, Director of Platform and Rendering Engineering, explains: “The bottleneck, the hitches you see… it’s not the I/O, the I/O on the machines is really good.
We still have some kinks to work out in the way we initialise data. So actually, yeah, it’s work for us to do.”

Since the debut of the Lumen in the Land of Nanite demo, there has been the perception that the massive bandwidth of the SSD in PlayStation 5 is what makes the Nanite system possible. However, the whole point of the virtualised texturing system used by Nanite is that it’s actually very lightweight in bandwidth - the only detail streamed in is that which is required onscreen at any given point.

“This distinguishes it from traditional engines… [with Nanite] it’s very gradual,” says Michal Valient. “As you move around, it hovers at like 10MB per frame, because we stream bits of textures, bits of Nanite data… we stream textures or small tiles as you need them. As you render them, Nanite picks the actual little clusters of triangles you need to render that particular view. And we stream just that, so we don’t over-stream too much. And that actually allows it to be really swift when it comes to just I/O and that throughput.”

[UPDATE: After publication, Epic asked for a correction here: the original piece quoted Michal Valient as saying the data throughput is 10MB/s - it’s 10MB per frame. At 30fps, this would be 300MB per second.]

In addition to delivering the open world hinted at by prior Unreal Engine 5 demos, the Lumen real-time global illumination system is also significantly improved. Previously using an impressive, if limited, software implementation, Epic has moved the system on to a hardware-accelerated ray tracing solution - providing performance and extra fidelity in indirect and diffuse lighting. Hardware RT also opens the door to realistic reflections and area light shadows.

Just like a movie’s Director of Photography would place a light card next to an actor for improved lighting in a scene, Epic does this with its characters in The Matrix Awakens.
“For example, when Trinity is in the car, and when you know the light doesn’t bounce because the seats are a bit too dark, we just add a white card [off-screen]. Lumen analyses the white card and you see that light bouncing back,” explains Jerome Platteux.

Epic is also proud of its mass AI system. Within the demo, there’s a section immediately after the chase set-piece that showcases a range of technologies - and in one of those micro-demo segments, you can see the AI agents (pedestrians, cars) highlighted.

“We wrote a new kind of high-performance scalable AI system here,” says Jeff Farris. “What you’re seeing in the demo here is 35,000 crowd members walking around, 18,000 vehicles simulated along with 40,000 parked cars. And [it’s not] a bubble around the character, like in the classic sort of way, right? We’re not culling based on view frustum or anything like that. I mean, there are some optimisations in there, obviously, but we track the whole thing. Let’s just simulate the city to demonstrate the performance potential of this AI system.”

What this translates to is a fully persistent population within the world - every entity can be tracked individually. Typically, in a traditional open world game, if you move away from the immediate area then come back, the pedestrians and traffic would be entirely different. That’s not the case here: Epic’s Mass AI system handles everything at scale. The only compromise is that the update rate of each agent varies according to its distance from the player. Even so, based on our experiences, it’s hard to see time-sliced compromises even at distance.

One of the key reasons that The Matrix Awakens can handle such a dense city is that detail supplied by the Nanite system is as close to ‘free’ as you’re going to get. One of the concerns about the system is that it may be limited to static geometry, but the inclusion of traffic shows it is more dynamic.
However, deformable meshes are still on the ‘to do’ list, meaning that the solution for crash damage on vehicles is somewhat sub-optimal and can cause performance dips - essentially, affected vehicles become standard rasterised objects.

“This is a result of the current limitations of Nanite, which only works on rigid objects. So this was a solution, which is very clever. The future is going to be Nanite, obviously, that’s where we are heading [but] at this time slice, we’re not there yet,” says Michal Valient.

But what the team are looking to emphasise is how dynamic this system is and the opportunities this gives to developers. The Matrix Awakens was created by a relatively small team with around 20 to 30 people handling the assets and around 50 to 70 staff members in the team as a whole. Towards the end of the project, its Slack channel increased to around 200 members as further personnel - such as marketing - entered the conversation. However, the process of creating the demo itself has led to significant optimisation to the point where if the project was rebooted from scratch, it could be achieved much more quickly.

Jerome Platteux explains: “Now, we will be so much faster, we’re building the track when we are on the train itself - and sometimes we’re building the train at the same time.” Michal Valient adds that, “We’re building the train… and the train’s on fire.”

And while the chase shoot-out system comes across as being separate and distinct from the open world exploration at the end of the demo, that’s simply not the case. “One thing I want to emphasise is that there are no smoke and mirrors in the interactive chase sequence. All of that happens within this city. It’s all in the same map, all in the same city...
we created and shot the linear pieces within the same city.”

“The process was actually interesting because [in a movie] you go to New York or San Francisco, and then you scout and you’re like, ‘oh, we can do a scene here… and then we can do a shot there, and then you go somewhere else.’ [With the open world] it’s actually pretty close to the way a film crew would go and start to film the city. You have tons of content, so you just find the right angle for that shot.”

Similarly, there are no major requirements for staging enormous set-pieces. Cars flipping during the shoot-out sequence are achieved simply by simulating a push through the Chaos physics system - “and off it goes,” says Michal Valient.

“Yeah, none of those reactions are scripted,” adds Jeff Farris. “You shoot a tyre, we give it a little kick in physics and it just goes through.”

What this means is that every play-through of the chase sequence produces different physical effects, the only constant being the collapse of the bridge at the end. This is still processed through the Chaos physics engine but it’s done offline, the output recorded and played back - similar to the way triple-A titles do it today for most major set-piece moments.

The Matrix Awakens is effectively three different demos in one: an incredible character rendering showcase, a high-octane set-piece and an ambitious achievement in open-world simulation and rendering. However, perhaps the biggest surprise is that all of these systems co-exist within the one engine and are intrinsically linked - but with that said, it is a demo and not a shipping game. There are issues, and performance challenges are evident.

Possibly the most noticeable problem is actually a creative decision. Cutscenes render at 24fps - a frame-rate that does not divide equally into 60Hz. Inconsistent frame-pacing means that even at 120Hz, there’s still judder. Jumps in camera cuts can see frame-time spikes of up to 100ms.
What’s actually happening here is that the action is physically relocating around the open world, introducing significant streaming challenges - a micro-level ‘fast travel’, if you like. Meanwhile, as stated earlier, speedy traversal and car crashes see frame-rate dip. Combine them and you’re down to 20fps. It’s at this point you need to accept that we’re still in the proof of concept stage.

The performance challenges also impact resolution. However, thanks to Unreal Engine 5’s temporal super resolution (TSR) technology, the sub-native rendering on Xbox Series X and PS5 looks suitably 4K in nature. That said, our earlier pixel counts of up to 1620p have had to be revised in the wake of feedback from Epic. When content is letterboxed, the borders aren’t simply black overlays as is often the case - Epic is concentrating GPU power into the visible area, meaning that we’re actually looking at native 1066p-1200p rendering. In the most intense action, 1080p or perhaps lower seems to be in effect, which really pushes the TSR system hard.

At the entry level, it’s incredible to see Xbox Series S deliver this at all but it does so fairly effectively, albeit with some very chunky artefacts. Here, the reconstruction target is 1080p, but 1555x648 looks to be the max native rendering resolution in letterboxed content with some pixel counts significantly below 533p too. It should be stressed that TSR can be transformative though, adding significantly to overall image quality, to the point where Epic allows you to toggle it on and off in the engine showcase section of the demo. Series S does appear to be feature complete, but in addition to resolution cuts, reductions in detail and RT do seem to be in play. [UPDATE: We’ve updated native resolution pixel counts on all systems.]

But from our perspective, The Matrix Awakens is a truly remarkable demonstration of what the future of games looks like - and to be honest, we need it.
While we’ve seen a smattering of titles that do explore the new capabilities of PlayStation 5 and Xbox Series hardware, this has definitely been the year of cross-gen. Resolutions are higher, detail is richer, frame-rates are better and loading times are much reduced - but what we’re seeing in The Matrix Awakens is a genuine vision for the future of real-time rendering and crucially, more streamlined production.

The Matrix Awakens is free to download from the Xbox and PlayStation stores and it goes without saying that experiencing it is absolutely essential.
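
For readers who want to check the arithmetic behind two figures quoted in this piece, here is a minimal Python sketch. It is our own back-of-envelope illustration, not anything taken from Epic’s code: the function names are hypothetical, and the cadence calculation assumes an idealised display where each frame appears on the first refresh at or after its due time. It shows why 24fps content alternates between three and two refreshes per frame on a 60Hz display yet divides evenly into 120Hz (so any judder there comes from frame-pacing rather than the maths), and how 10MB per frame at 30fps works out to 300MB per second.

```python
from fractions import Fraction
from math import ceil


def refreshes_per_frame(content_fps: int, display_hz: int, frames: int = 8) -> list[int]:
    """How many display refreshes each content frame is held for.

    Assumes an idealised display: frame i first appears on the first
    refresh at or after its due time of i / content_fps seconds.
    """
    counts = []
    for i in range(frames):
        # Exact rational arithmetic avoids floating-point rounding at boundaries.
        first_refresh = ceil(Fraction(i * display_hz, content_fps))
        next_first_refresh = ceil(Fraction((i + 1) * display_hz, content_fps))
        counts.append(next_first_refresh - first_refresh)
    return counts


def streaming_mb_per_second(mb_per_frame: float, fps: float) -> float:
    """Sustained streaming throughput implied by a per-frame data budget."""
    return mb_per_frame * fps


if __name__ == "__main__":
    print("24fps on 60Hz :", refreshes_per_frame(24, 60))
    print("24fps on 120Hz:", refreshes_per_frame(24, 120))
    print("10MB/frame at 30fps:", streaming_mb_per_second(10, 30), "MB/s")
```

An uneven three-two hold pattern means successive frames stay on screen for different lengths of time, which is perceived as judder; an even pattern of five refreshes per frame would not produce it on its own.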